Supported file formats and their extensions are listed in the following tables for Word, Excel, and PowerPoint. The following table describes the file formats that are supported in Word, alphabetized by extension.

- The binary file format for Word 97-Word 2003.
- The XML-based and macro-enabled file format for Word 2019, Word 2016, Word 2013, Word 2010, and Office Word 2007. Stores Visual Basic for Applications (VBA) macro code.
- The default XML-based file format for Word 2019, Word 2016, Word 2013, Word 2010, and Office Word 2007.
- Conforms to the Strict profile of the Open XML standard (ISO/IEC 29500).

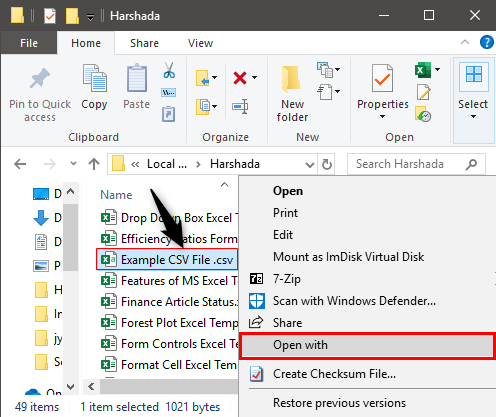

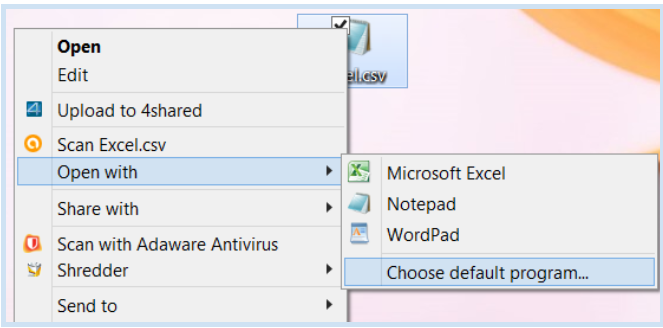

# How to set the default program to open CSV

This tutorial covers how to read a single CSV file, multiple CSV files, and all files from a local folder into a Spark DataFrame, how to use options to change the default behavior, and how to write a DataFrame back to CSV files using different save options.

Applies to: Microsoft 365 Apps for enterprise, Office 2019, and Office 2016

Using `spark.read.csv("path")` or `spark.read.format("csv").load("path")`, you can read a CSV file with fields delimited by pipe, comma, tab (and many more) into a Spark DataFrame. These methods take the file path to read from as an argument.

`val df = spark.read.csv("src/main/resources/zipcodes.csv")`

This example reads the data into DataFrame columns named "_c0" for the first column, "_c1" for the second, and so on. By default, the type of all these columns is String.

If the file has a header with column names, you need to explicitly set the header option with `option("header", true)`; otherwise, the API treats the header as a data record.

We can read all CSV files from a directory into a DataFrame just by passing the directory as the path to the `csv()` method.

Spark CSV dataset provides multiple options to work with CSV files. Below are some of the most important options explained.

### delimiter

The delimiter option specifies the column delimiter of the CSV file. By default, it is the comma (,) character, but it can be set to pipe (|), tab, space, or any other character using this option.

### inferSchema

The default value of this option is false; when set to true, it automatically infers column types based on the data. Note that it requires reading the data one more time to infer the schema.

### header

This option is used to read the first line of the CSV file as column names. By default, the value of this option is false, and all column types are assumed to be strings.

### quote

When a column contains the delimiter that is used to split the columns, use the quote option to specify the quote character. By default it is the double quote ("), and delimiters inside quotes are ignored, but using this option you can set any character.

### nullValue

Using the nullValue option, you can specify a string in the CSV to treat as null. For example, you can use it to treat a date column with the value “” as null in the DataFrame.

### dateFormat

The dateFormat option sets the format of the input DateType and TimestampType columns.

Note: Besides the above options, the Spark CSV dataset also supports many other options; please refer to this article for details.

## Reading CSV files with a user-specified custom schema

If you know the schema of the file ahead of time and do not want to use the inferSchema option for column names and types, specify custom column names and types using the schema option. Each column is added to the schema with a call such as `.add("EstimatedPopulation",IntegerType,true)`.

Once you have created a DataFrame from the CSV file, you can apply all the transformations and actions that DataFrame supports. Please refer to the link for more details.

Use the `write` method of the DataFrame, which returns a DataFrameWriter, to write a Spark DataFrame to a CSV file. For a detailed example, refer to Writing Spark DataFrame to CSV File using Options.

While writing a CSV file you can use several options, for example header to output the DataFrame column names as a header record and delimiter to specify the delimiter of the CSV output file. Other available options include quote, escape, nullValue, dateFormat, and quoteMode.

Spark DataFrameWriter also has a `mode()` method to specify the SaveMode. The argument to this method is either one of the strings below or a constant from the SaveMode class.

- overwrite: overwrites the existing file; alternatively, you can use SaveMode.Overwrite.
- append: adds the data to the existing file; alternatively, you can use SaveMode.Append.
- ignore: ignores the write operation when the file already exists; alternatively, you can use SaveMode.Ignore.
- errorifexists or error: the default option; returns an error when the file already exists; alternatively, you can use SaveMode.ErrorIfExists.

`df2.write.mode(SaveMode.Overwrite).csv("/tmp/spark_output/zipcodes")`
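The read options, custom schema, and save mode described above can be combined in one place. The following is a minimal sketch, not the original article's code: the object name `CsvRoundTrip`, the helper names, the `Zipcode` and `City` columns, the `NA` null marker, and the sample rows are all assumptions made up for illustration (only `EstimatedPopulation` appears in the text). It writes a tiny pipe-delimited file to a temporary directory so that it does not depend on any existing data.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files

import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}
import org.apache.spark.sql.types.{IntegerType, StringType, StructType}

object CsvRoundTrip {

  // Hypothetical schema: only EstimatedPopulation is named in the text;
  // Zipcode and City are placeholder columns for this sketch.
  val schema: StructType = new StructType()
    .add("Zipcode", IntegerType, true)
    .add("City", StringType, true)
    .add("EstimatedPopulation", IntegerType, true)

  // Read a pipe-delimited CSV with a header row, applying the explicit
  // schema instead of inferSchema. "NA" is an assumed null marker.
  def readCsv(spark: SparkSession, path: String): DataFrame =
    spark.read
      .option("header", "true")
      .option("delimiter", "|")
      .option("nullValue", "NA")
      .schema(schema)
      .csv(path)

  // Write the DataFrame as comma-delimited CSV with a header record,
  // replacing any output that already exists at the path.
  def writeCsv(df: DataFrame, path: String): Unit =
    df.write
      .mode(SaveMode.Overwrite)
      .option("header", "true")
      .csv(path)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("CsvRoundTrip")
      .master("local[*]")
      .getOrCreate()

    // Made-up sample rows, written to a temporary directory.
    val dir = Files.createTempDirectory("csv_round_trip")
    val input = dir.resolve("zipcodes.csv")
    val sample = Seq(
      "Zipcode|City|EstimatedPopulation",
      "10001|Sample City|1000",
      "10002|Other City|NA"
    ).mkString("\n")
    Files.write(input, sample.getBytes(StandardCharsets.UTF_8))

    val df = readCsv(spark, input.toString)
    df.printSchema()
    df.show()

    writeCsv(df, dir.resolve("output").toString)

    spark.stop()
  }
}
```

Passing the schema explicitly avoids the extra pass over the data that inferSchema needs. Using `SaveMode.Overwrite` here is a convenience for repeated runs; with the default mode, a second run against the same output path would fail because the output already exists.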
